Dan Bricklin's Web Site: www.bricklin.com


How HTML wins

HTML became the dominant way to deliver applications to the desktop. What is needed for that to happen in the mobile world? Looking at history, I believe that it will be the availability of easy-to-use authoring systems.


There are so many articles saying that HTML5 is the way to go for mobile development, yet you still see so many apps being written in native code. What will it take to finally make HTML be as dominant on mobile as it is on the desktop? Does it just need a more complete JavaScript framework? What about easier connection of JavaScript to a SaaS database? What about another way for JavaScript to access the raw hardware?

In this essay I look at the history that led to HTML's dominance and try to learn from that.

Humble beginnings

In the mid-1990s, Microsoft Windows was king of computing. Windows 95 came out to great hoopla in August 1995. C++ was big for development, with the MFC framework a big help for developing native applications. Visual Basic was well established for creating apps, too. PowerSoft's PowerBuilder development environment for business applications that could do database create, read, update, and delete operations ("CRUD" operations) was sold to Sybase for nearly $1B in 1995. AOL was mailing (physical mail) millions of floppies and CDs to everybody with its online access program ready to install. Traditionally programmed applications were dominant.

HTML and the World Wide Web started in the early 1990s. Version 1.0 of the first popular, dominant commercial browser, Netscape Navigator, was released in December 1994. It supported very simple HTML, which included simple styling and simple forms for data entry. Later versions in 1995 added tables, cookies, and very early JavaScript. Microsoft released its first browser in 1995.

At this point, extremely rich applications could be written in C++, Visual Basic, or any of many programming systems. HTML was very lean, with very basic data display and entry. It was clear which was better. (To see how primitive HTML and browsing were around then, see "Video of Bob Frankston's tour of the WWW in 1994".)

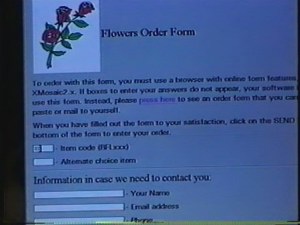

Online commerce in a browser in 1994 (from the video)

However, a strange thing happened. HTML kept getting better, though it always lagged behind what you could do natively. It also became more and more popular, and more and more people wrote in it.

HTML was a very simple language. It could be mastered by people who were not good programmers -- or even programmers at all. You could make something that looked "OK" pretty quickly. Using a web server for distribution, you could provide any number of applications to others without installation if they already had a browser.

The change that made HTML take off: Easy authoring

And then another thing happened. WYSIWYG editors for creating HTML came out. Microsoft Word had a free add-in, from Microsoft, that could turn Word documents into an HTML facsimile. FrontPage was launched by Vermeer Technologies in 1995 and acquired by Microsoft in 1996. It and other authoring systems made it even easier to create and maintain HTML pages with all the trimmings, including some server-side support.

Simple server-side programming systems, such as Perl scripts run through CGI on a web server, could do database "CRUD" operations. Together with these authoring systems, they made it quite easy to build business applications around HTML.

The entrance of Allaire's ColdFusion database-driven content server in 1995 made it even easier and fueled further moves to HTML-based business applications. If you could write HTML you could probably learn the little more it took to use ColdFusion.

Over the next few years, the use of HTML and the web exploded. The "open" specifications of HTML and HTTP and open languages like Perl and PHP, together with lots of proprietary authoring systems like FrontPage, Dreamweaver, and others, helped fuel this growth. (I helped lead a company, Trellix Corporation, that first made a non-HTML system that was quickly morphed into an HTML one. I still use a Trellix tool to write what you are reading here on my web site.) Despite "native applications" being "better" visually and, in some cases, functionally, HTML became dominant on the desktop except in certain "power" applications. Advances in JavaScript and CSS, including the technologies involved in Ajax, helped cement this situation through the last decade.

There are two things about HTML that I want to point out:

First, compared to other forms of "programming", HTML is easier to learn and use for many people. Application development can be more efficient compared to native code. This is especially true for custom applications that are very niche-oriented and don't warrant the time and effort required by traditional programming methods.

Second, HTML is relatively standard, and works on a wide variety of target devices. PC, Mac, Unix, and Linux could all read the same web pages and access the same applications. "Write Once, Run Everywhere" was pretty much true. Nothing stopped an existing or a new platform from supporting HTML browsing and every one did.

While being open on the client side was a key attribute of HTML and its success, being open on the authoring or serving side was not a requirement (most authoring tools during this phase were proprietary software, and many serving systems were also at least partially proprietary).

The mobile era

We are now in the mobile era. The "post PC" era. Even new PCs now have touch screens. What about HTML vs. native code? It seems that for most modern-style, touch-enabled applications, system-specific native code is dominant. Is that the way it will be, or will open HTML (with CSS and JavaScript) eventually become the dominant technology for building business applications like it has on the desktop?

Right now, it seems that most apps are native, and not accessed through a browser. Part of the reason for this is that the browser itself gets in the way, both because of the "chrome" around the content area and because of the scrolling and page navigation behavior that can conflict with an app's UI. However, if you access HTML with something other than the built-in browsers, such as a "wrapper" app that just includes a Web View of some sort (UIWebView in iOS, WebView in Android), then those problems go away. In fact, quite a few of the apps in the Apple App Store, and on other platforms, are in large part built that way with either static HTML content included in the app, web-format data retrieved while running from the Internet, or both.

HTML and its related technologies of JavaScript and CSS have provided the basis on which a lot of the content of non-browser-based apps is displayed. Through iOS 6 (we'll see what happens in iOS 7 and beyond depending upon what Apple adds to the iOS SDK), rich text and complex, dynamic layouts are very hard to program natively for iPhones and iPads. The main "simple" way is to use a UIWebView to display HTML.

Hybrid systems like PhoneGap/Cordova (see "An Overview of HTML 5, PhoneGap, and Mobile Apps") let a developer write in relatively complex JavaScript, CSS3, and HTML to produce "normal" looking and behaving apps. Apps created this way are very common and have made it easier for developers to "write once and run multiple places" with their apps.

What's missing?

What we are missing, though, are the easy-to-use, non-coding systems to let a wider range of people develop and deploy apps for mobile devices. Systems that hide most of the coding and let you concentrate on the UI elements and "business logic" are just starting to emerge. There are those that create HTML5 apps (HTML5 is often the shorthand way of saying recent HTML, JavaScript, CSS3 and related technologies and not just that version of HTML itself). I believe that it will take such "quick and easy for the masses" systems to push HTML5 to the fore in mobile the way that HTML overtook native coding on the desktop. (That is why I joined a company, Alpha Software, that just came out with one such development system, Alpha Anywhere; see "Why Alpha Anywhere matters".)

Other than HTML5, I don't see any other set of open technologies that will let this happen. As before, you need open technologies for the execution on the device (like HTML was on the desktop) and a mixture of open and proprietary authoring systems and serving technology for the provider of the apps.

Is this really a common scenario, where "quick and easy for the masses" leads to adoption, or is it only for the desktop web?

This scenario happens over and over again in the world of computing: an easier-to-use system, one that lets "the masses" concentrate on the problems they want to solve rather than on line-by-line coding in a language like Objective-C, Java, or JavaScript, leads to growth in the use of an area of computing. The definition of "the masses" changes, but it always includes many more people than those who created the applications before. Years ago, in my "Why Johnny can't program" essay, I wrote that by moving from statement-based languages to more declarative or WYSIWYG systems you empower a wider range of people.

For example, in the early 1950s programming computers was done in machine code or very early assemblers. Only very specially trained people with the appropriate aptitude could write those programs. Along came FORTRAN (from the words "Formula Translation") that converted a more math-oriented "language" into instructions the computer could execute. Suddenly, large numbers of scientists, mathematicians, and economists could make use of computers. Starting in the early 1960s, COBOL (the "Common Business-Oriented Language") empowered people who could apply computers to business tasks. While these were statement-oriented languages, they were much more approachable than machine code and were aimed at a particular segment of users. They often also let you solve the problem on one system and move that solution to other computers without rewriting. Even the "C" language appealed more to system programmers than the assembly languages used before. The BASIC language (developed at Dartmouth College) was made very simple, interactive, and forgiving, and it appealed to less technical people and opened up computing to others (the "B" stands for "beginner's").

In the PC era, electronic spreadsheets opened up computing to the masses of business people who otherwise would have been stopped by the difficulty and tediousness of programming in even the simple BASIC language. Word processing was so much easier than the markup languages previously used in computerized typesetting and similar work that it opened up that use of computing. Code-less or code-optional systems like FileMaker, Alpha Four, and later Microsoft Access opened up database systems on PCs to hundreds of thousands or millions of people who were unable to write programs in BASIC, dBase, or other more complex systems.

So, this situation, in which authoring systems that cater to the needs of "domain experts" rather than professional programmers lead to large increases in custom computer applications, is the common case, not the special case. (A "domain expert" is a person who wants to know how to solve problems in a particular problem domain as opposed to the "professional programmer" who identifies more with learning computer languages and other computer technologies.)

What does this mean for Apple?

At this point, the largest number of mobile apps are written in Objective-C for Apple's iOS. The availability of all of these device-specific apps is felt to be of particular benefit to Apple and a problem for competitors. How would the rise of HTML-based mobile apps affect Apple?

I think that it would not be bad for Apple and perhaps very good. Historically, very strong support for HTML helped make iOS devices popular. The fact that you could successfully display almost any web site, even those with complex CSS and JavaScript, and navigate so easily with zooming and panning, was, and continues to be, a major selling point for iOS devices. Apple's devices are frequently measured against competitors by how fast and faithfully they handle web sites. Other than the issues that arose over Adobe Flash content, Apple has been a leader in this field with its support of the WebKit browser engine. In fact, in the first release of the iPhone, HTML-based apps were supposed to be the only non-Apple apps allowed and Apple added various features to the mobile Safari browser to help make that possible.

The availability of many new apps made possible by more widespread authoring will only increase the value of iOS devices. It will spur their use, which will continue fueling purchases. Apple has always competed well on its hardware and other aspects of its products.

In addition, compatibility is a two-way street. HTML-based apps that a company makes for its own internal use that run on devices from other manufacturers, perhaps less expensive or more rugged than Apple's, will still be able to run on Apple devices, and make the Apple devices more likely to be included in "approved" lists.

So, I don't see success of HTML on mobile devices as being a major problem for Apple. There will always be a large number of developers who will develop specifically for Apple's platform. Apple's operating systems have been very enticing for developers that are able to code specifically for them, and from what they are showing publicly of iOS 7, there is no indication that that is abating. However, the unscheduled expense of needing to retool native code Objective-C apps for iOS 7 is probably making many companies look more closely at HTML-based development.

-Dan Bricklin, 1 July 2013


© Copyright 1999-2018 by Daniel Bricklin

All Rights Reserved.
